There seems to be a lot of confusion around what companies like Fastly (FSLY) and Cloudflare (NET) do and why their technology is so special, so I thought I'd give an overview of how this industry sprang up over the years and where it’s going next. But, especially, why there is so much excitement about their potential, both now and going forward. If you are still thinking of them as CDNs, you are missing a large part of the bigger picture. So grab some popcorn, and expect a few epiphanies along the way. And as a bonus: I am finally taking inspiration from Ben @ Stratechery, and drew out some concepts to better explain them. (All diagrams by me. You've been warned!)

To start off with, let's walk through how we got here, and learn about CDNs! (Oh boy!)

Content Delivery Networks (CDNs)

CDNs have been around for a long while, and are a mainstay in the architecture of the world-wide web. Let’s walk through what they solve by learning some terms:

- Origin servers = Servers that a company maintains to distribute content & data (e.g. APIs, files, video streaming), hosted in the cloud or a physical data center. It costs a lot to maintain your own infrastructure, so companies look for ways to reduce costs, by reducing the server load (less infrastructure needed to serve up peak demand) and the traffic to it (less inbound and outbound traffic costs to that infrastructure). Origin servers can scale up and down under container- and serverless-based architectures, but this does not address high bandwidth costs and high latency.

- Bandwidth = Amount of data that can flow at one time (the size of the pipe you are pushing the traffic through). More bandwidth means more network actions can occur simultaneously. However, this also means more data transfer costs for the company running those servers. Cloud IaaS providers not only charge for compute time, but also for amount of data transferred in and out. For on-prem, ISPs typically cap the max bandwidth allowed, so traffic can only scale so much without huge increases in costs.

- Latency = Amount of time it takes between a user action (request) and the results it generates (response). Lower is better, as it makes for a responsive application or service. If you are a gamer, you are well aware what latency is: high latency means lag, which is when you are mashing the buttons but it takes a few extra moments for your character to move. If you have high latency in a multi-player game, low latency players are going to run circles around you as they take you out, then do little dances over your body.

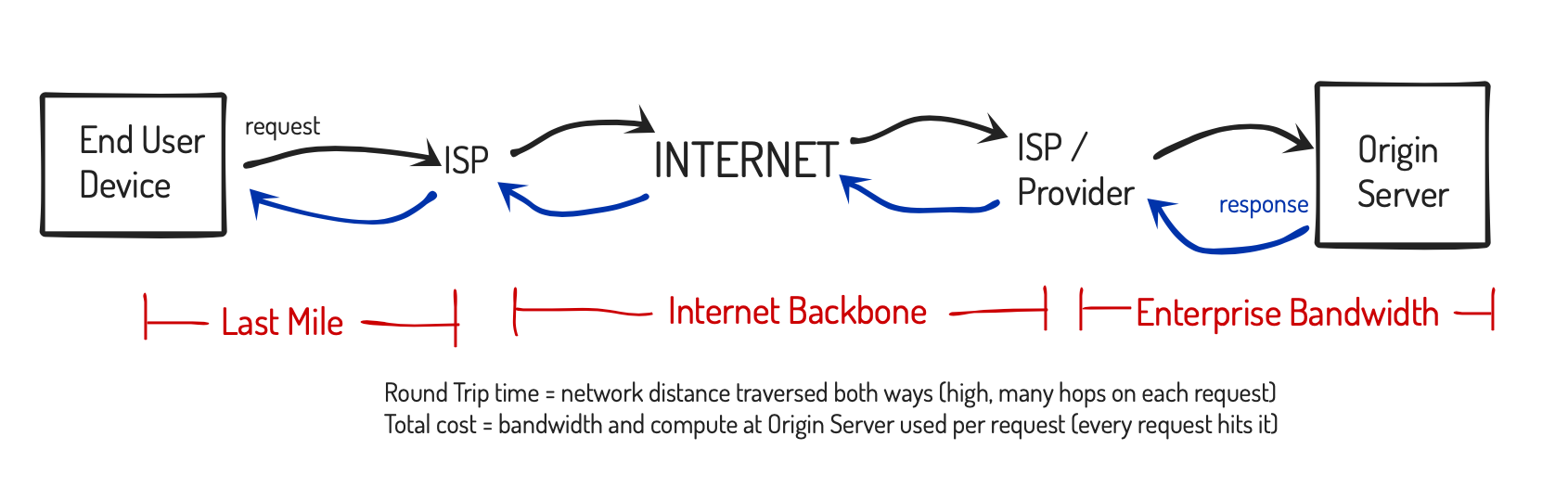

- Round-Trip Time (RTT) = Overall latency measure of the total time it takes to get a response back from a service. With websites and apps, this means the sum of: the time of transit from the endpoint device making the request to the server… PLUS the time the server takes to receive it, act on it, & respond… PLUS the time of transit to send the response from the server back to the endpoint. It is impacted greatly by physical distance, and is then magnified even more in complex apps that are making multiple requests to the server continuously.

- Last Mile = The final networking step between end devices and the internet, generally from the ISP to the end device. CDNs try to place their cache servers as near to the ISP as possible, in order to lower latency as much as they can.

- Hop = When networking traffic jumps between networking devices, typically going from one network to another. Using traceroute, I can see that between me and nytimes.com there are 40+ hops (with the Last Mile of my ISP to me easily being the first 6-7), as the traffic makes its way from my home network to my ISP and across the internet to their service. More hops (network transfers) means a higher RTT.

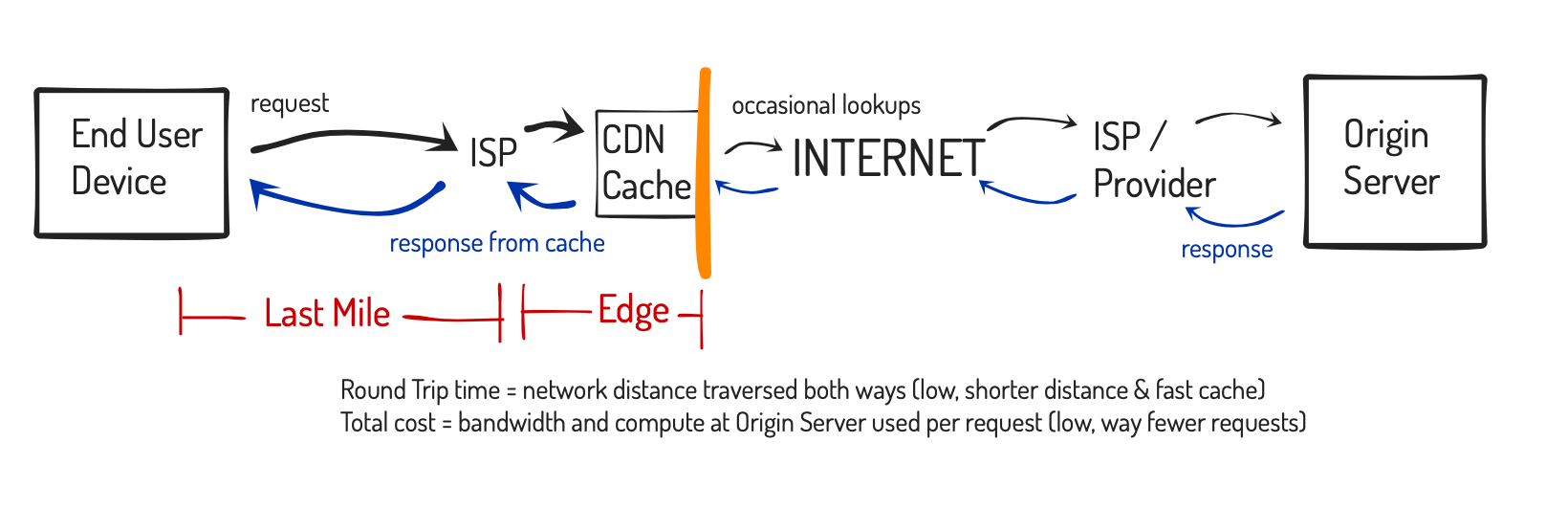

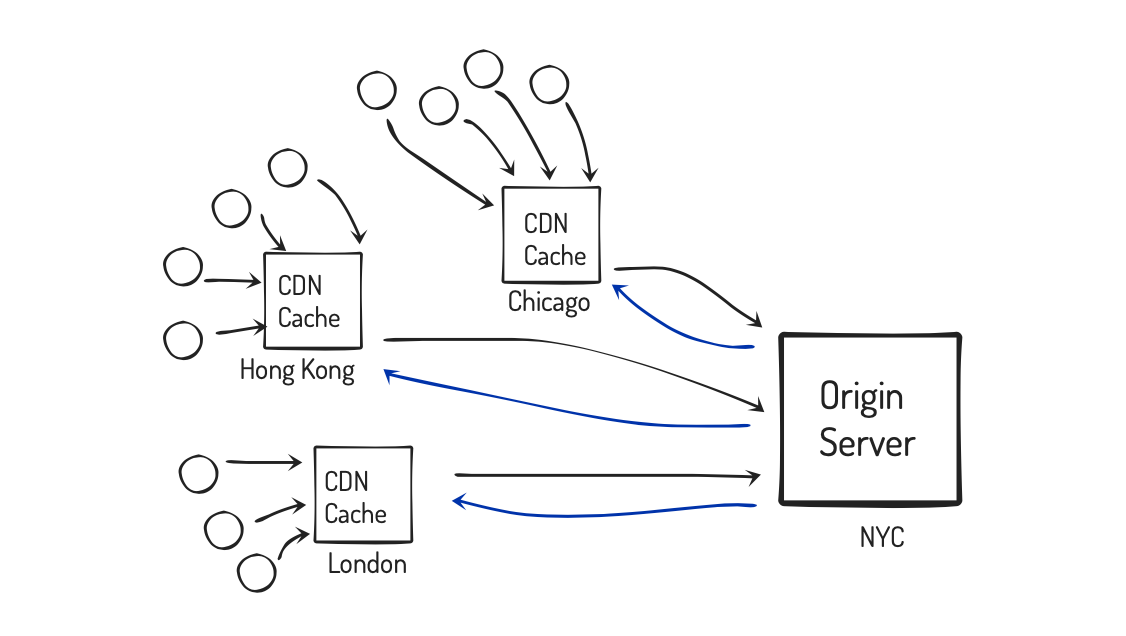

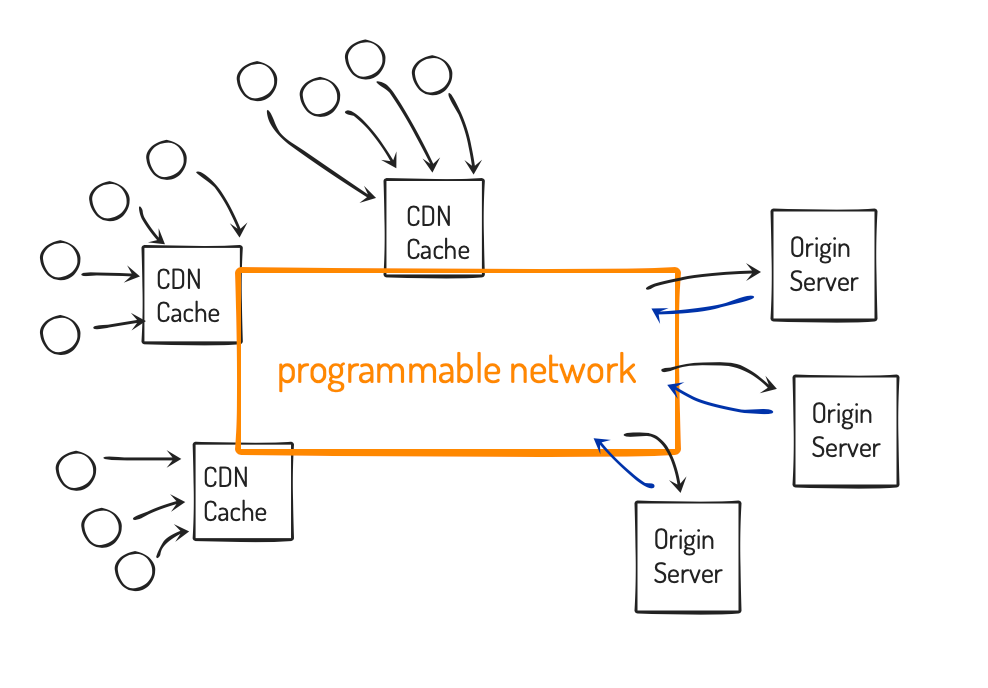

- Content Delivery Network (CDN) = Localized caching services that are able to greatly reduce the load on origin servers & the bandwidth to get to them. These are globally positioned, in order for end users to hit the cache nearest them, greatly reducing the latency to end users. RTT is drastically lowered, which makes for a faster and more responsive app or website. The cache gets loaded from the origin server, either from each cache doing it separately, or with one acting as master and distributing it to other caches in the CDN's network. This greatly reduces the number of times that data is requested from origin servers, which in turn reduces infrastructure and bandwidth costs.
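To make the caching idea concrete, here is a minimal sketch of a single edge cache sitting in front of an origin server. Everything named here (`EdgeCache`, `fetch_from_origin`, the 60-second TTL) is an illustrative assumption, not any CDN's actual API. The point is only to show why the origin sees so few requests.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class EdgeCache:
    """Toy edge cache: serve from local memory, fall back to origin on a miss."""

    def __init__(self, fetch_from_origin: Callable[[str], str], ttl_seconds: float = 60.0):
        self.fetch_from_origin = fetch_from_origin  # hypothetical call to the origin server
        self.ttl_seconds = ttl_seconds              # assumed freshness window
        self.store: Dict[str, Tuple[str, float]] = {}  # path -> (body, expiry time)
        self.origin_hits = 0

    def get(self, path: str, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        cached = self.store.get(path)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: the origin never sees this request
        self.origin_hits += 1  # cache miss or expired entry: go back to origin
        body = self.fetch_from_origin(path)
        self.store[path] = (body, now + self.ttl_seconds)
        return body


cache = EdgeCache(lambda path: f"content of {path}")
for _ in range(1000):
    cache.get("/article/1", now=0.0)
print(cache.origin_hits)  # 1: a thousand user requests, one origin request
```

A real CDN layers much more on top (cache-control headers, eviction, many POPs), but the cost savings come from this same hit-versus-miss split.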

A traditional request/response cycle of a web application or service looks something like this, with many transfers across inner networks (hops) at each step.

With a CDN acting as cache, the content will be much nearer, and the RTT much faster:

Example: NYTimes has a publishing platform, with infrastructure that hosts its content, and management software to track that content & handle the workflow around publishing new submissions. NYTimes.com doesn't serve up articles from its NYC data center or its AWS infrastructure for every global user -- it distributes the content across the globe to CDNs, in order to have it closer to the users accessing it, and to greatly reduce infrastructure & bandwidth costs (outbound traffic from its servers, and the extra load on those servers). A user in Singapore might be pulling content from a CDN cache in Jakarta (12ms latency), instead of from a server in Washington DC (275ms latency). [Actual values.]
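A quick back-of-the-envelope calculation shows how that gap compounds. This sketch treats the two latencies quoted above as round-trip times, and assumes (purely for illustration) a page that needs 20 requests made one after another; real browsers parallelize some of these, so treat the totals as a rough upper bound.

```python
def total_wait_ms(rtt_ms: float, sequential_requests: int) -> float:
    """Total time spent waiting on the network when requests run one after another."""
    return rtt_ms * sequential_requests


ORIGIN_RTT_MS = 275  # Singapore to Washington DC, from the example above
EDGE_RTT_MS = 12     # Singapore to the Jakarta cache, from the example above
REQUESTS = 20        # assumed number of sequential requests per page load

print(total_wait_ms(ORIGIN_RTT_MS, REQUESTS))  # 5500 (ms spent waiting on origin)
print(total_wait_ms(EDGE_RTT_MS, REQUESTS))    # 240 (ms spent waiting on the edge)
```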

That's just talking about static content, like corporate websites, published articles, e-commerce product listings, product manuals, etc. The need for low latency and lowering traffic impact scales up a lot more for video game feeds and streaming video services. You can push video content from your origin server once to a CDN, and that cache can then be serving up that playback to millions of viewers from the cache, instead of having that heavy networking load on your company's servers.

So basically, CDNs are a service that host content at the "internet edge" in order to be more local to users across the globe. When CDNs tout they are "a single hop away", what they mean is from ISPs (not users). CDNs have thus gone wild with creating POPs across the globe, just to remain fast (and relevant).

According to industry leader Akamai, “CDNs carry nearly half of the world’s internet’s traffic.” This is an industry with a lot of players, including AWS with their CloudFront service. Lots of content-related capabilities have subsequently cropped up, as CDNs try to stay competitive.

- Cache mgmt interfaces - APIs that customers can use to manage cached content

- Purge - remove or refresh cache content

- Dynamic content delivery - not just static content [e.g. blog post comments]

- Prefetch - get the content before the users ask for it

- Origin shield - have one cache act as master that is the only one that talks to origin, and it then distributes content to other caches

- Compression - make your content smaller and so less costly to transmit & store

- Image optimization - compress images while maintaining high resolution

- Video CDN - focusing on the more complex capabilities and compression formats of video streaming for video on demand (VOD)

- Live video streaming - extending the Video CDN to be geared for broadcasting live events, like sports and concerts

These kinds of features have allowed certain CDNs to get ahead of others in the market, but generally, all of them have caught up and now offer most/all of these.
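Two of those features, origin shield and purge, are easy to picture in code. The sketch below is a toy model under my own assumptions (a `Cache` class, 50 edge caches, a fake `origin` function); it is not how any particular CDN implements them.

```python
from typing import Callable, Dict, List


class Cache:
    """Toy cache tier; `upstream` is whatever it asks when it has a miss."""

    def __init__(self, upstream: Callable[[str], str]):
        self.upstream = upstream
        self.store: Dict[str, str] = {}

    def get(self, key: str) -> str:
        if key not in self.store:
            self.store[key] = self.upstream(key)
        return self.store[key]

    def purge(self, key: str) -> None:
        self.store.pop(key, None)


origin_requests: List[str] = []


def origin(key: str) -> str:
    """Hypothetical origin server that records every request it receives."""
    origin_requests.append(key)
    return f"v{len(origin_requests)}:{key}"


shield = Cache(origin)                          # the only cache that talks to origin
edges = [Cache(shield.get) for _ in range(50)]  # edge caches ask the shield, not origin

for edge in edges:
    edge.get("/logo.png")
print(len(origin_requests))  # 1: fifty edge misses, but only the shield went to origin

# Purge everywhere, then the next request pulls a fresh copy from origin.
for cache in [shield, *edges]:
    cache.purge("/logo.png")
print(edges[0].get("/logo.png"))  # v2:/logo.png
```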

Network Architecture

Let’s talk about network architecture a bit, as it matters greatly in how CDNs are positioning themselves in the market. CDNs utilize an architecture that serves up content from cache servers that are inter-networked and dispersed across the globe into key locations.

- Internet Exchange Points = Major intersections of global internet traffic, typically the boundaries between major carriers, ISPs and CDN networks.

- Edge server = Strategically placed server that is geo-located to be as close as possible to the Last Mile of a wide body of endpoints (end devices making requests). In CDNs, this is what is running their platform software in order to cache and serve up content.

- Point of Presence (POP) or Edge locations = Physical hosting location of the nodes in a global network, consisting of network infrastructure that is housing one or more edge servers. They are typically located in large cities, near Internet Exchange Points.

The easy path that CDNs have positioned themselves into (and are taking advantage of) is the fact their global networks sit between a customer's users and its servers. They are able to expand into new services beyond what a CDN is traditionally thought of, such as:

- Web Application Firewall (WAF) - protects APIs and apps by filtering the incoming traffic before it gets hit

- Distributed Denial of Service (DDoS) protection - mitigating attacks trying to swamp your services with requests; because the cache is being hit, your origin servers are protected

- Load balancing - sits over your origin applications or APIs to allow splitting traffic to different origin services, optimizing how global traffic disperses and allowing services to easily scale

Cutting edge platforms were able to provide some distinct benefits early on. But the rest of the CDN market has already caught up here too, and does these same things.

Between the Edges

So that is CDNs in a nutshell, and I don't care about ANY of those capabilities above — except in how they funded all the infrastructure efforts that are now being leveraged into edge networking. All of the above CDN services are in a VERY commoditized industry. Akamai (AKAM) is the 800lb gorilla in this space, and the upstarts can and do differentiate, by being more visible in pricing, and offering more and more of the CDN add-ons and new features in one platform. Limelight (LLNW) tries to differentiate with video & live events, Fastly (FSLY) by being so developer-focused and speed-obsessed, and Cloudflare (NET) by being so developer-focused and by upsetting the pricing model to a flat rate (instead of consumption-based), including a very generous free tier.

But there is a drastic difference in strategy on how these CDNs set up the architecture of their POPs and the networking between them. This is what differentiates Fastly and Cloudflare from most legacy CDNs, and enables them to be so developer-focused.

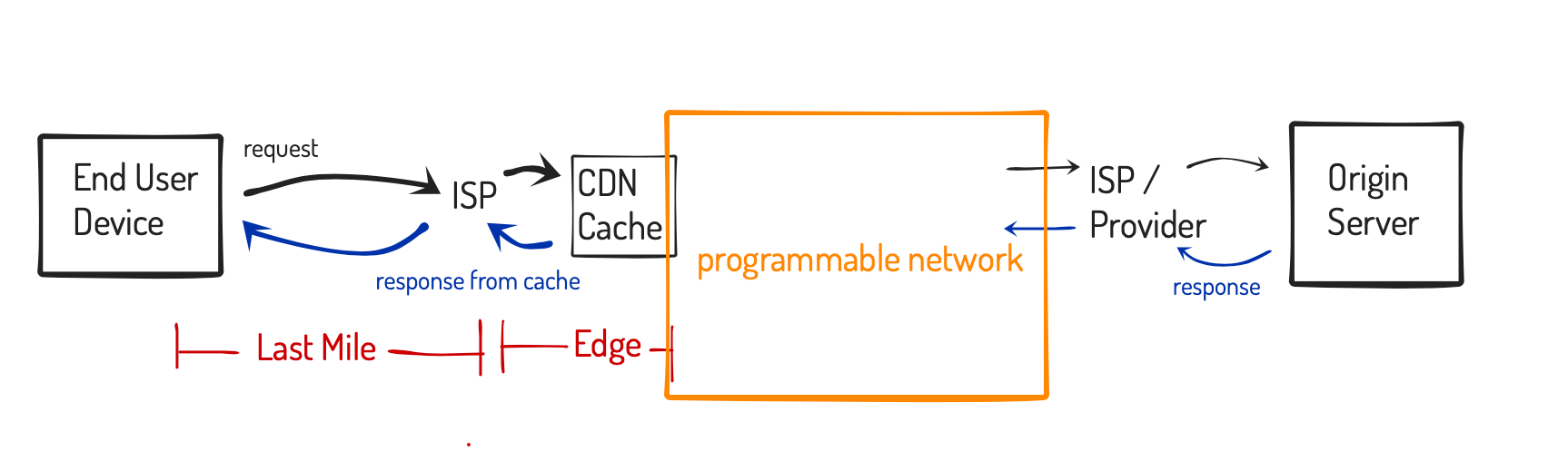

Programmable Networks

The more modern CDNs are building their infrastructure in a developer-focused way, exposing APIs to allow customers better control over the functionality of their platform. This makes the network between edges programmable. Edge networks that were built from software-defined networking (SDN) architectures better allow for this flexibility and customization. Programmable edge network APIs can be utilized as building blocks by customers, allowing them to better tie these content management & networking capabilities into their own software platforms and services. They can automate their workflows with their other tools and platforms, and tightly control the behaviors of their content and the network flows in custom ways.

Why don’t all CDNs provide this level of control? Because they are frozen into their existing network architectures with hardware appliances, amplified by the heavy number of POPs they then created that they must inter-network and maintain. Akamai has 216 thousand POPs, but this is NOT an advantage, it is a hindrance if they now want to pivot their architecture! Akamai also now has an edge compute platform in beta – which, I imagine, for developers, is like using a monolithic Oracle database (Akamai) instead of being nimble with MongoDB (Fastly). The fact it is not over a highly programmable network architecture would seem to greatly hinder that effort from the start.

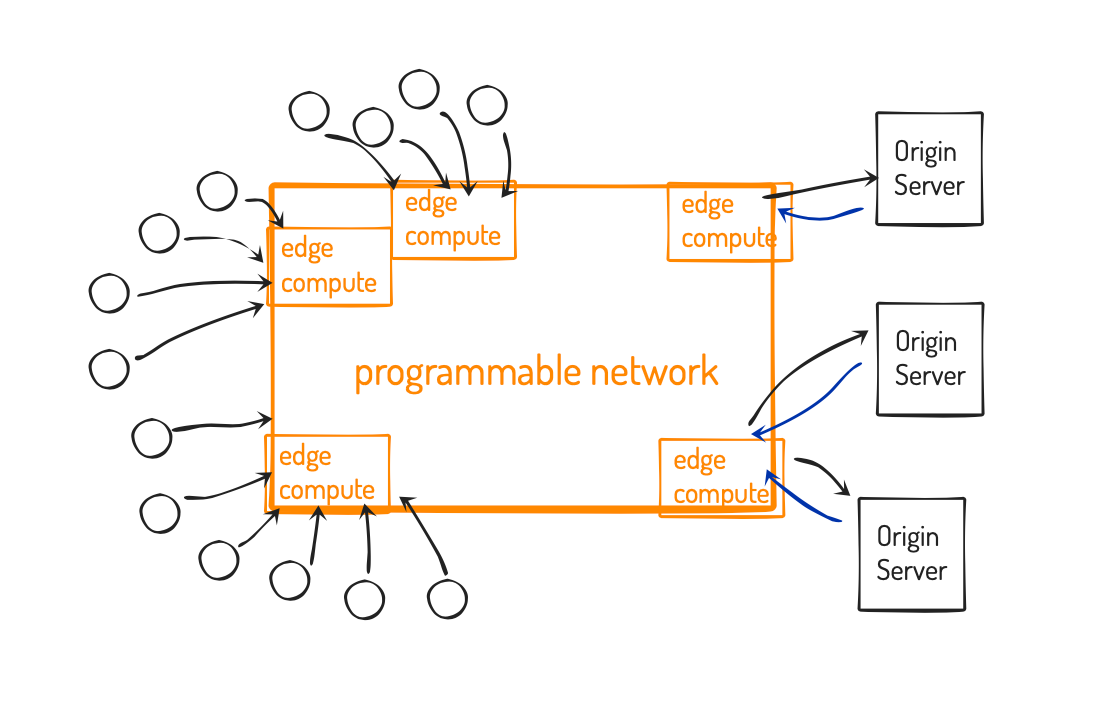

Next-gen CDNs that use programmable SDN devices are able to allow precise control over their entire global network, so now have a lot more flexibility when it comes to edge servers and how to deploy them strategically. Fastly made the conscious decision to make these single servers very powerful (foreseeing the coming edge compute capabilities needed), and so needed far fewer POPs to maintain and inter-connect. This strength that Fastly and Cloudflare have built into their architecture further bolsters that CDN benefit I mentioned above – with edge networks, their programmable global networks sit between a customer's users and its servers.

Being a programmable network means they can take all those networking capabilities listed above (WAF, DDoS mitigation, and load balancing) and make them smarter and faster. It allows services to inject more logic within the networking itself, and changes can propagate instantly. They can apply logic at any layer, in order to programmatically scale and adjust networking flows as desired. This enables features like:

- Real-time control - allows for changes to be made & propagated more quickly across the edge network, as change logic can be handled inline

- Context-aware routing - allows traffic to flow across different network paths and make priority decisions based on request specifics, like what the content is (file vs video) or from any user metadata in the request (user settings, device used, the geo-location, etc)

- Traffic scalability - gives networking services like WAF, DDoS mitigation & load balancing the ability to scale when hitting peak traffic or demand

- Global internet "fast lanes" - detect & automatically circumvent problem areas and outages, sidestepping slow areas of the internet

- Infrastructure-agnostic distribution - network logic can control what infrastructure things are routed to (cloud, multi-cloud, hybrid, or on-prem) based on real-time concerns like context, traffic, demand or network conditions
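As a rough illustration of context-aware routing and infrastructure-agnostic distribution, here is a small sketch of a routing decision made from request context. The `Request` fields, the pool names, and the rules themselves are all made up for the example; a real platform would expose its own request metadata and health signals.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Request:
    path: str
    country: str       # assumed to be derived from the client IP at the edge
    device: str        # e.g. "mobile" or "desktop"
    content_type: str  # e.g. "video" or "file"


def choose_backend(req: Request, healthy: Set[str]) -> str:
    """Pick a backend pool from request context; all pool names are hypothetical."""
    if req.content_type == "video":
        preferred = "video-pool"
    elif req.country == "DE":
        preferred = "eu-cloud"
    elif req.device == "mobile":
        preferred = "mobile-api"
    else:
        preferred = "default-cloud"
    # Route around a pool that is currently marked unhealthy.
    return preferred if preferred in healthy else "default-cloud"


healthy_pools = {"video-pool", "mobile-api", "default-cloud"}  # "eu-cloud" is down
print(choose_backend(Request("/clip.mp4", "US", "desktop", "video"), healthy_pools))  # video-pool
print(choose_backend(Request("/index.html", "DE", "desktop", "file"), healthy_pools))  # default-cloud
```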

Fastly and Cloudflare took very different tacks from the legacy CDNs in how they built up their global network of edge servers and how those systems intercommunicate. This makes a VITAL difference in edge networks' architecture over the ones built by traditional CDN providers.

Livin’ on the Edge

Those crucial early architecture decisions are now allowing them to leverage their existing platform and pivot into new and exciting directions. Fastly took a sharp turn towards this strategy back in April 2017, calling it their edge cloud platform. Cloudflare joined them soon thereafter, opening up their global platform to 3rd party apps in June 2017. That eventually led them both to edge compute, with Cloudflare Workers in 2018, and Fastly now beta testing Compute@Edge.

The term edge cloud platform is a clear and appropriate name for their industry, but I prefer the simpler edge network, as it really focuses on the core of where its power lies -- a global network that can provide services on the traffic that flows between its borders. The power of edge networks is between its edges. It sits entirely between the services and the endpoint devices using them, as well as between all the edge servers themselves (the entry points into that network).

And now, beyond their programmable global networks, comes an even more exciting point -- these companies are adding programmatic capabilities into the edge servers themselves, in what is called edge compute. This allows developers to run programs out on the edge, on servers that are extremely close to the last mile of ISPs and their users.

People focus heavily on what edge compute can do as a standalone service - and it is rightfully exciting. But they aren’t taking in the full picture that the edge network covers it all. It is the overall combination of programmable edge network AND programmable edge compute that excites me about this forward-facing industry. They have built global networks for their own purposes, but designed their architectures to ultimately allow programmatic capabilities over the network and its edge servers (entry points) that can now be pivoted into MANY new directions.

The lightbulb you might get at this point is this: the potential with edge networks is not just about the compute being put up at its edges, the potential is with THE NETWORK BETWEEN THEM ALL. Edge networks are an edge cloud platform over both the network AND its edge servers, and can oversee all the traffic between them all. This focus on being a programmable global network between edges now allows a lot of benefits that go way, way beyond localized content caching and intermediary security features that CDNs offer.

It's not just a platform to benefit their customers; the edge network platform itself is now able to create many, many additional applications (product lines) from here. The CDN capabilities that got them to this point are now JUST AN APPLICATION (the first one!) that was built on their edge network. New product lines that are sure to arise mean TAM expansion, which will propel their revenue growth rates to far beyond what mature CDN providers typically see.

Now come the platforms and the product lines being built on top of it. Zscaler (ZS), the next-gen Zero Trust security-as-a-service (SECaaS) provider, has the exact same type of global networking architecture. I consider them an edge network as well, albeit one solely focused on cybersecurity protection of network traffic between edges, including the last mile on either end. Another focused edge network is Agora (API), who built an API service for real-time video and audio, then augmented it with a global edge network optimized for real-time video delivery. Any of the other more generalized edge networks can build similar things — and they are!

It is long past due to stop thinking of Fastly and Cloudflare as being a part of the commoditized CDN industry – that is simply how they got to this point. Their next-gen architectures allowed them to pivot way beyond those capabilities, however. We must start thinking about these companies, along with Zscaler and Agora, as global edge networks -- regardless of what route they took (cybersecurity VPN replacement or a CDN caching content) to get to this point.

Let's talk about some ways they can leverage their edge network from here. You'll notice the points below all build upon themselves. This is where it gets really exciting...

Edge compute over programmable networks

Edge servers can act as micro-cloud platforms, in order to bring serverless compute & storage capabilities closer to the endpoints. Their edge servers are already geo-located near the endpoints accessing them, in order to greatly reduce latency and traffic costs. So this is an extremely lucrative side-benefit to building out the network of CDN servers.

Endpoints are typically non-powerful devices (such as mobile apps, browser-based websites, cameras, POS systems, etc) that interface with a server elsewhere that is making the decisions. The compute is normally back in the core infrastructure somewhere – those origin servers we talked about before. It's easy to see that edge compute will be lowering costs over traditional cloud infrastructure – the more that compute can be taken off of origin servers, the lower the costs needed on infrastructure and bandwidth to handle peak demand. Since edge compute puts computing & storage capabilities extremely close to endpoints, there are very specific cases this helps with. Other "edge compute" platforms exist, like the one I mentioned Akamai has in beta – but without the programmable edge network that exists between them.

Handling networking logic at the edge

Remember when I talked about software-defined networking — not only do we have compute and storage capabilities available for custom applications, but those same edge servers can be controlling the network between themselves too. This powerful combination, of edge compute being the controller over the network flows, leads to multiple advantages over anything traditional CDNs are capable of.

These edge networks are already giant developer-friendly CDN networks where customers can tie into APIs in order to control their traffic & content flows across the network. Now they can run custom code out in the edge, too, in order to make logical decisions anywhere across that network. They can be making compute-intensive decisions within the edge network itself, not from one end or the other, based on the request or its metadata (context). Certain users or device types, or certain geo-locations, can take different paths in the networking and in the content routing. Premium users could get more bandwidth or faster routes. Companies could do A/B user testing, by sending a subset of users to different systems in order to test new features. This is all already possible to a small degree in existing CDNs with programmable networks, but edge computing greatly enhances the amount of computational logic that can be utilized, anywhere across the edge network. One possibility with edge compute controlling the networking between edges is in greatly enhancing cybersecurity features.
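The A/B testing case above is a good example of logic that fits naturally at the edge. Here is a minimal sketch of one common way to do it: hash the user ID so each user lands in the same bucket on every request, at any edge server, without shared state. The experiment name, the 10% split, and the hostnames are assumptions for illustration only.

```python
import hashlib


def ab_bucket(user_id: str, experiment: str, treatment_percent: int) -> str:
    """Deterministically assign a user to 'treatment' or 'control'."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    slot = int.from_bytes(digest[:4], "big") % 100  # stable value in 0..99
    return "treatment" if slot < treatment_percent else "control"


def pick_origin(user_id: str) -> str:
    """Send an assumed 10% of users to a hypothetical beta system."""
    if ab_bucket(user_id, "new-checkout", 10) == "treatment":
        return "https://beta.example.com"
    return "https://www.example.com"


# The same user always gets the same answer, whichever edge server handles them.
print(pick_origin("user-123") == pick_origin("user-123"))  # True
```

The same pattern extends to the premium-user case: swap the hash check for a lookup on the user's plan and return a different route.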

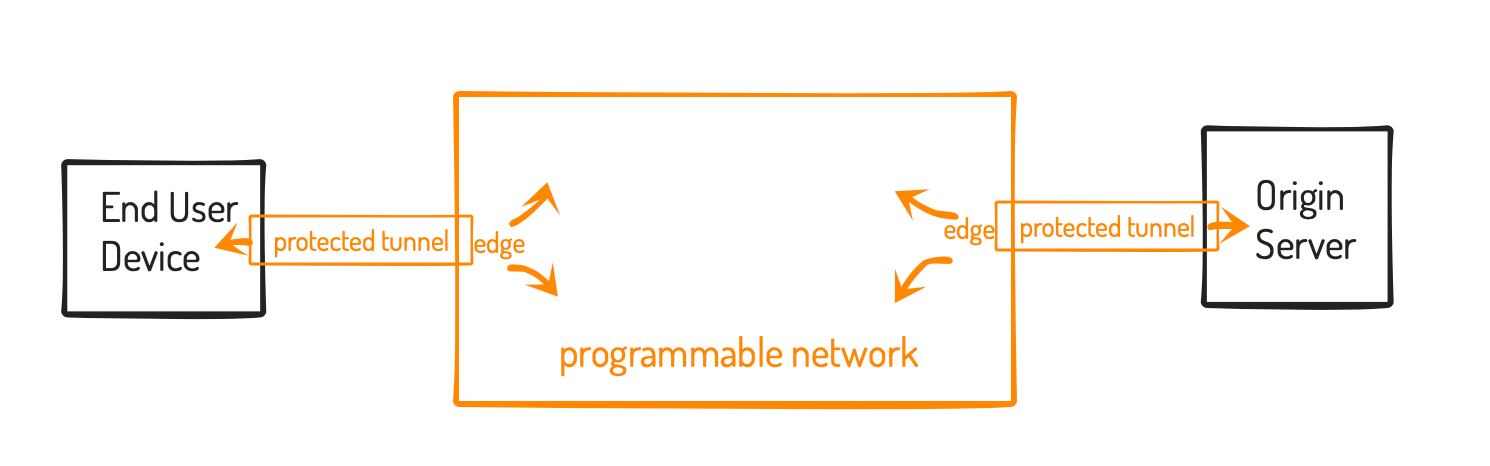

End-to-end security

Edge networks already protect all the traffic between the edge entry points (edge-to-edge). Traffic can travel securely most of the way across the globe entirely within their edge network, so that the only part of the journey that is unprotected is outside of the edges of their network – the Last Mile on either end. So why not extend that out further from the edges?

Edge networks already have a huge head start in this capability, in that they already protect their global network between all edge servers (POPs), and the edge servers have become the entry points into it. In order to provide protection end-to-end, they have created applications to protect the endpoint to the nearest edge server (Last Mile of device), and from the nearest edge server to the enterprise service being protected (Last Mile of enterprise bandwidth). By creating secure tunnels extending their edge network on both ends, they can now easily provide end-to-end protection.

Both Zscaler and Cloudflare provide services that protect all traffic end-to-end across the entirety of their global edge network. Traffic from endpoints securely enters their global network and can now be protected all the way to the final destination (SaaS service, internal APIs, etc), then back again. They are protecting both outgoing requests (from a customer’s users to the SaaS services they utilize), and incoming requests (from a customer’s customers or workforce to the internal services they utilize).

Cloudflare has their Argo Tunnel product to bring their edge network all the way into your data center (protecting the edge-to-origin above). Their Magic Transit product goes a step further, and brings Cloudflare’s platform directly onto your enterprise network. They then added another product, Cloudflare Access, to protect incoming traffic, allowing users to safely access a company’s origin server, regardless of where it lives (in the cloud or an on-prem data center). This is accomplished through Zero Trust capabilities they have built, which are possible due to the software-defined networking architecture they've adopted.

Using those software tunnels, the only unprotected part left was the Last Mile between the endpoint (requesting device) and the nearest edge server. So Cloudflare then created a Secure Web Gateway (SWG) product called Cloudflare Gateway, to protect the traffic from the endpoint making requests to the SaaS services that an enterprise uses. Between outgoing traffic protection in Gateway and incoming traffic protection in Access, Cloudflare now offers end-to-end protection of network traffic for an enterprise's users.

If this combo sounds familiar, it is because this is entirely what Zscaler (ZS) has built. They protect outgoing traffic from endpoints to external servers (ZIA, their Secure Web Gateway) and incoming traffic from endpoints to origin servers (ZPA, their Zero Trust secure access method). Cloudflare evolved their Gateway and Access products into a new competing platform called Cloudflare for Teams. [See more details on Cloudflare for Teams in my Cloudflare deep dive from March.]

This shows what kind of architecture leverage these edge networks (aka edge cloud platforms) truly have. Cloudflare may have just doubled its TAM, with an ideal product for today’s work-from-home world. Note that in response to the pandemic, Cloudflare happened to make this new product line free for the first 6 months. Last earnings, they noted that they already have 1000 customers on it, so I expect the company to start seeing a revenue boost in Q4 2020. In addition, their end-to-end protection platform seems to be much easier to deploy than Zscaler's, which is complex enough to heavily require system integration partners.

Fastly, on the other hand, has not yet made protecting network traffic a primary focus, beyond the DDoS and WAF protection they already offer over origin servers. But just today, they posted a tech blog entry highlighting their extreme focus on security -- so it is clearly at the forefront of their minds. I would expect them to ultimately move into this market, just as Cloudflare has already done, and the blog post gave us a major hint that "there’s more to come on that soon".

For those edge networks already embracing network security features in their platform, there are many directions they can go from here, as Zero Trust efforts continue to evolve:

- Zscaler introduced a “B2B” product, that is essentially a groups feature over ZPA, that allows multi-company partnerships to secure intercommunication between themselves. Their users and their utilized services can intermingle as needed, controlled by an IAM service like Okta. I expect Cloudflare for Teams to start getting promoted in this same direction, as it is a product line that is not only ideal to secure an enterprise's internal workforce, but also to secure the partnerships they have with others.

- ML/AI security features can greatly extend these cybersecurity product lines, to improve the security both within the edge network (between POPs) and the gaps from POPs outward (endpoint to edge, edge to origin).

- Edge networks can start to develop other cybersecurity product lines, like Identity Management (controlling & managing authentication and access within the edge network itself), and Cloud Access Security Brokers (CASB, in order to strictly control and monitor workforce access to specific SaaS providers, and prevent using unauthorized ones).

A fantastic tidbit emerged during Cloudflare's recent Serverless week – Cloudflare for Teams was developed on their Workers (edge compute) platform, and they expect ALL future applications to also be created via Workers edge compute. (Yet another sign that CDN is now just an application.)

Focus on reducing latency for real-time responsiveness

Not only can you handle networking logic in how services and users intercommunicate, but you can better focus on responsiveness as a feature when an edge server is the one responding instead of origin servers. Go back to my Singapore latency above. An endpoint in Singapore running a complex app with continual intercommunication could be talking to an edge server that is 12ms away, instead of 275ms away. That difference is massive when dealing with two-way bandwidth- and compute-intensive use cases, like advanced gaming and AR/VR. Edge compute will absolutely be a vital part in getting latency down as much as possible in order to power the next generation of gaming & streaming visualizations. Edge compute could be making decisions around how to best maintain latency, such as stepping down the bandwidth requirements of a feed until the optimum combination is found, or lowering all of your traffic speeds across all simultaneously occurring network requests when you are nearing bandwidth capacity.
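The "stepping down the bandwidth requirements of a feed" idea can be sketched in a few lines. The bitrate ladder and the 80% headroom factor below are assumptions I picked for illustration; real streaming stacks use their own renditions and measurement logic.

```python
BITRATE_LADDER_KBPS = [8000, 5000, 2500, 1200, 600]  # assumed renditions, highest first


def pick_bitrate(available_kbps: float, headroom: float = 0.8) -> int:
    """Return the highest rendition that fits within a share of the available bandwidth."""
    budget = available_kbps * headroom  # leave room for the user's other traffic
    for bitrate in BITRATE_LADDER_KBPS:
        if bitrate <= budget:
            return bitrate
    return BITRATE_LADDER_KBPS[-1]  # nothing fits: fall back to the lowest rendition


print(pick_bitrate(7000))  # 5000
print(pick_bitrate(2000))  # 1200
print(pick_bitrate(100))   # 600
```

Because the edge server sits a few milliseconds from the user, it can re-run a decision like this far more often than an origin on the other side of the world could.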

Extending the capabilities of endpoints

Since the latency is so low, the edge servers can better act as an extension of the endpoint device itself. Endpoint devices are not typically that powerful, so as compute and storage are needed, the endpoint can talk to the edge server nearest it to use low-latency compute as needed. Instead of running analytics or compute-intensive tasks directly on the end device (eating up its valuable resources), part of the software could be running in the nearest edge compute. This helps turn dumb devices into smart ones; for instance, helping an IoT device do voice recognition.

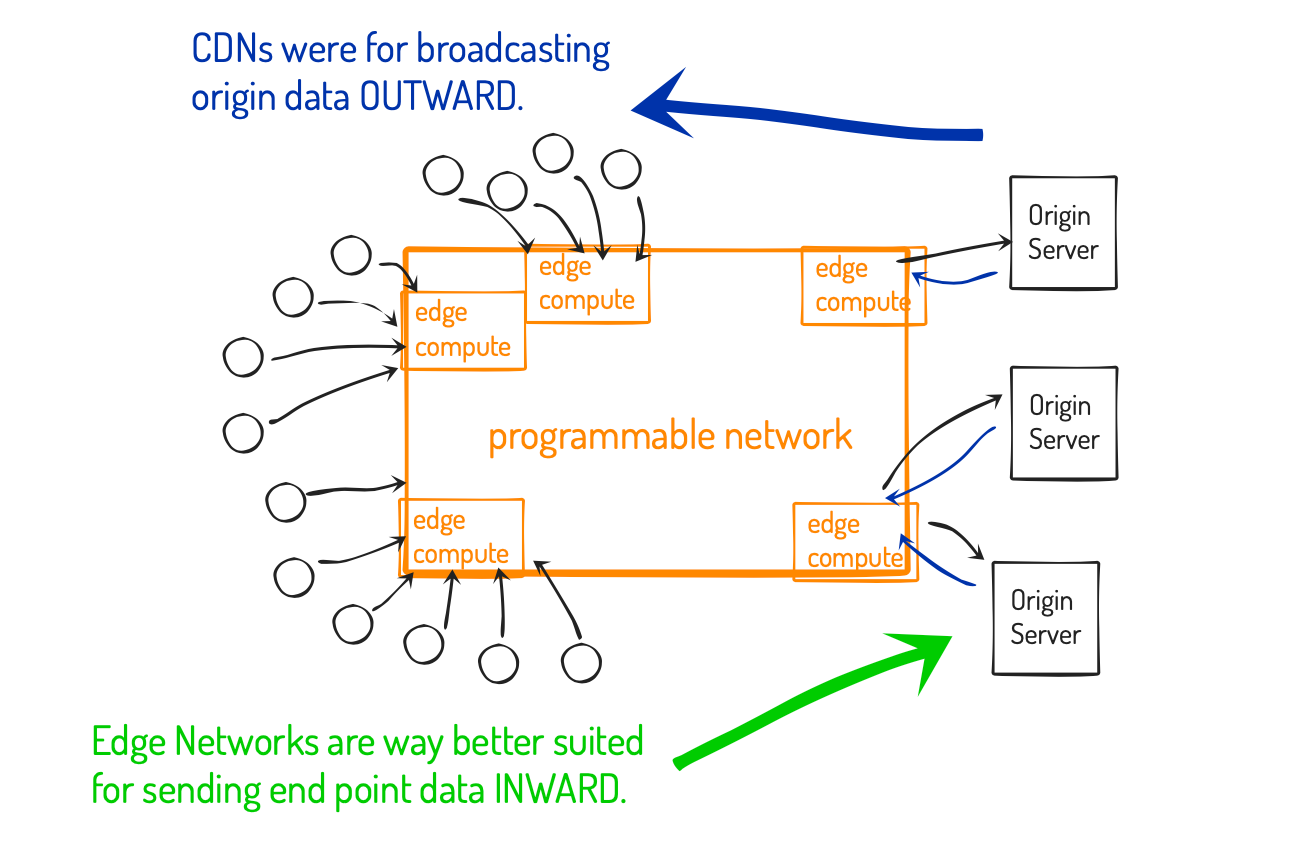

A global network that flows inward, not outward

CDNs are an incredibly powerful way to distribute content from your origin servers out to your users. However, they were not built for collecting data from endpoints and passing it back. Having a programmable edge network (with edge servers as the access points) allows for data collection to happen globally, and edge compute can then be parsing and sorting and analyzing that data before sending it on to its final storage place.

Compute can be used for filtering data or running analytics over it, then only sending a smaller subset to the central core. For an incredibly simplistic example, an internet-connected security camera (endpoint) is sending a video feed 24x7 to a centralized data storage & monitoring system in the cloud. Instead of the high cost of having all that continual bandwidth and data storage, edge compute could sit in the middle, and filter the video feed down to only the sections of interest (say, when something is moving in the video feed). It could then make a compute-intensive decision to isolate and extract the 10% of the footage that is relevant, and upload just that portion to the core system, discarding the other 90% of footage where nothing is occurring. This would result in huge savings on the compute & storage costs of the core data systems, as well as the bandwidth into them.
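Here is a deliberately simplistic sketch of that camera filter. It assumes each frame arrives as a flat list of grayscale pixel values and flags a frame when it differs enough from the one before it; the threshold and the frame format are my own assumptions, and real motion detection is considerably more involved.

```python
from typing import List, Sequence

Frame = Sequence[int]  # assumed format: a flat sequence of grayscale pixel values


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel difference between two frames of the same size."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def frames_with_change(frames: List[Frame], threshold: float = 10.0) -> List[int]:
    """Return indexes of frames that differ noticeably from the previous frame."""
    keep = []
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            keep.append(i)
    return keep


still = [0] * 16
moved = [0] * 8 + [200] * 8
feed = [still, still, moved, moved, still]
print(frames_with_change(feed))  # [2, 4]: only these would be uploaded to the core
```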

This trend of sending endpoint data inward is snowballing. Gartner wrote in 2018 that only around 10% of enterprise data was created and processed outside of data centers or the cloud, and that they expected this to rise to 75% by 2025.

“Around 10% of enterprise-generated data is created and processed outside a traditional centralized data center or cloud. By 2025, Gartner predicts this figure will reach 75% …

Organizations that have embarked on a digital business journey have realized that a more decentralized approach is required to address digital business infrastructure requirements ...

As the volume and velocity of data increases, so too does the inefficiency of streaming all this information to a cloud or data center for processing.”

The volume of data & network traffic being generated at the edge is at an all-time high, and rising rapidly. Edge networks are sure to capture the bulk of that traffic increase.

Creating a more resilient network

Since all these edge servers can talk to each other, they can also better intercommunicate on their own statuses. This allows the edge network itself to monitor and keep itself healthy. Edge networks are inherently a dispersed network. Edge servers could be running monitoring and analytics over themselves and their nearest neighbors. ML/AI routines could be utilized in order to improve a server's processes, or determine when and who it passes requests on to if it gets overloaded. Edge servers can be auto-balancing the network load or help take the strain off of struggling resources. If one is getting swamped with endpoint requests more than others, it could be pushing the excess requests to neighboring edge compute nodes.
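A minimal sketch of that last idea, pushing excess requests to neighbors, might look like the following. The POP codes, load numbers, and the "least-loaded neighbor" rule are all assumptions for illustration; a production network would weigh latency, cost, and health as well.

```python
from typing import Dict, List


def place_request(pop: str, load: Dict[str, int], capacity: Dict[str, int],
                  neighbors: Dict[str, List[str]]) -> str:
    """Serve locally if there is room, otherwise hand off to the least-loaded neighbor."""
    if load[pop] < capacity[pop]:
        chosen = pop
    else:
        candidates = [n for n in neighbors[pop] if load[n] < capacity[n]]
        # If every neighbor is also full, keep the request local rather than drop it.
        chosen = min(candidates, key=lambda n: load[n] / capacity[n]) if candidates else pop
    load[chosen] += 1
    return chosen


load = {"SIN": 100, "CGK": 10, "KUL": 50}       # hypothetical current request counts
capacity = {"SIN": 100, "CGK": 100, "KUL": 100}
neighbors = {"SIN": ["CGK", "KUL"], "CGK": ["SIN"], "KUL": ["SIN"]}

print(place_request("SIN", load, capacity, neighbors))  # CGK (SIN is full)
print(place_request("KUL", load, capacity, neighbors))  # KUL (has room locally)
```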

Taking advantage of global positioning

Since these edge servers are dispersed globally, these edge networks can take advantage of that positioning, and leverage edge compute to run rulesets on network traffic that are highly honed to the regulatory environment that they reside in. Edge compute nodes in Germany can be applying different rulesets than ones in Singapore. This is especially important in this era of data sovereignty, where governments are beginning to mandate that customer data must reside in the country-of-origin. Even state-to-state in the USA, the servers could be applying different regulations on customer data or applying a different set of tax laws. The CEO of Cloudflare spoke heavily about this trend towards compliance in his recent blog post during their "Serverless Week".
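In code, location-specific rulesets could be as simple as a lookup keyed by where the edge node sits. The rules below are placeholders I invented to show the shape of the idea; they are not a statement of what any country's regulations actually require, and the store names are hypothetical.

```python
from typing import Dict

# Illustrative placeholder rules only; not a description of any real regulation.
RULESETS: Dict[str, Dict[str, bool]] = {
    "DE": {"store_in_region": True, "log_client_ip": False},
    "SG": {"store_in_region": True, "log_client_ip": True},
}
DEFAULT_RULES: Dict[str, bool] = {"store_in_region": False, "log_client_ip": True}


def rules_for(pop_country: str) -> Dict[str, bool]:
    """Look up the ruleset for the country this edge node is located in."""
    return RULESETS.get(pop_country, DEFAULT_RULES)


def storage_target(pop_country: str, central_region: str = "us-east") -> str:
    """Decide where a record may be written, based on where the edge node sits."""
    if rules_for(pop_country)["store_in_region"]:
        return f"regional-store-{pop_country.lower()}"
    return f"central-store-{central_region}"


print(storage_target("DE"))  # regional-store-de
print(storage_target("BR"))  # central-store-us-east
```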

Stitching together responses

In this dispersed environment, responses to user requests may be generated from different locations, such as different origin systems within the same company, or from different SaaS providers that have partnered to combine their services into some final product. Edge compute could be utilized to build responses from different locations - some data residing in CDN cache, some in edge compute, and some from remote origin servers - then combining it and sending it back to the user as fast as possible. This saves at least an extra step (and additional latency) of compiling the response in a cloud or on-prem service.

Response handling could also be making compute-heavy, on-the-fly decisions about where to pull data from to deliver back to the end user. Playback of live video can be constantly shifting to the fastest back end, or routing the data feeds around problematic areas of the internet. Ad tech can be inserting ads into content based on user & context right at the Last Mile.
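Stitching a response together from several sources might look something like the following Python sketch. The three sources, their delays, and their payloads are invented for illustration, and `fetch` is a stand-in that just sleeps to simulate network latency.

```python
import asyncio


async def fetch(source: str, delay: float, payload: dict) -> tuple[str, dict]:
    """Stand-in for a real lookup; sleeps to simulate latency."""
    await asyncio.sleep(delay)
    return source, payload


async def stitch_response(user_id: str) -> dict:
    # Fire all lookups at once, so total latency is roughly that of the
    # slowest source rather than the sum of all three.
    parts = await asyncio.gather(
        fetch("cdn-cache", 0.01, {"layout": "home-v2"}),
        fetch("edge-compute", 0.02, {"greeting": f"hello {user_id}"}),
        fetch("origin", 0.05, {"account": "premium"}),
    )
    # gather() returns results in the order the calls were listed.
    return dict(parts)


response = asyncio.run(stitch_response("u42"))
print(response)
```

The user waits for one combined response built at the edge, instead of the pieces being assembled back in a cloud or on-prem service first.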

Running distributed, decentralized computing at scale

Edge compute will obviously be very useful in situations dealing with the interconnectedness of endpoints, especially where a service has to talk to endpoints continuously. This gets even more useful at scale, when the endpoints are globally scattered and each is talking to the edge server nearest to them. This is called distributed edge computing, an inherent feature in edge compute that gives edge networks a huge benefit over traditional cloud infrastructure.

People tend to think of edge compute as being a mini-cloud service that exists in a vacuum, just talking to endpoints nearest it. Yes, it can help bring services nearer to those endpoints, but it gets really interesting when those mini-clouds all start working in tandem with each other across the entire global network. They can be sharing data, or cross-coordinating requests. They could co-mingle data across multiple edge servers, say, to track an endpoint as it traverses across multiple POPs (as it moves around the globe). Any highly distributed data collection that occurs over different geographic regions will greatly benefit, such as fleet, asset, infrastructure, or supply chain monitoring. But beyond that, the distributed analytics that this enables is something that could benefit ANY industry with heavy endpoint traffic (app economy, API economy, IoT, etc).

[I could make the distinction between distributed compute, where the edge computes are distributing the load across themselves, compared to dispersed compute, where edge computes are intercommunicating but working separately, such as separating work by geo-region. Intercommunicating edge compute nodes are capable of either or both in tandem. But really, you can call all of that distributed edge compute and be accurate – it all just boils down to the fact the compute nodes are inter-working with each other.]

As an example, for a GPS & data tracking system in a nationwide vehicle fleet, instead of sending all the data into a centralized location, each tracked vehicle (each endpoint) could be pushing their data on a frequent basis to the edge server nearest them, and that edge server would then consolidate the data from the endpoints reporting to it, filter & compress it, then forward it all to the centralized location. Now imagine what distributed analytics could do over that. Your ML process could be running across 50 edge servers, each handling a subset of nearby endpoints, and only sending the relevant results (a small subset of the data being collected at the edge) back to the origin servers. Or they could be working together, exchanging data & findings in order to strengthen the overall analytical model.
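Here is a rough Python sketch of the consolidate, filter, compress, and forward steps from the fleet example above. The record fields (`vehicle`, `ts`, `pos`, `speed`), the `speed_limit` parameter, and the choice of JSON plus zlib are all assumptions for illustration; a real system would pick its own schema and wire format.

```python
import json
import zlib
from statistics import mean


def summarize_at_edge(readings: list[dict], speed_limit: float) -> bytes:
    """Consolidate raw vehicle readings into a compact payload for the origin."""
    # Consolidate: group the readings reported to this edge server by vehicle.
    by_vehicle: dict[str, list[dict]] = {}
    for r in readings:
        by_vehicle.setdefault(r["vehicle"], []).append(r)

    summary = []
    for vehicle, rows in sorted(by_vehicle.items()):
        latest = max(rows, key=lambda r: r["ts"])
        summary.append({
            "vehicle": vehicle,
            "last_position": latest["pos"],
            "avg_speed": round(mean(r["speed"] for r in rows), 1),
            # Filter: only count the events the origin cares about.
            "speeding_events": sum(1 for r in rows if r["speed"] > speed_limit),
        })
    # Compress before forwarding to the centralized location.
    return zlib.compress(json.dumps(summary).encode("utf-8"))


readings = [
    {"vehicle": "truck-1", "ts": 1, "pos": [41.0, -87.0], "speed": 60.0},
    {"vehicle": "truck-1", "ts": 2, "pos": [41.1, -87.1], "speed": 80.0},
    {"vehicle": "truck-2", "ts": 1, "pos": [34.0, -118.0], "speed": 50.0},
]
payload = summarize_at_edge(readings, speed_limit=70.0)
decoded = json.loads(zlib.decompress(payload))
print(decoded)
```

The origin receives one summary row per vehicle rather than every raw reading, which is where the bandwidth and infrastructure savings come from as the number of endpoints grows.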

Handling endpoint traffic as it is massively scaling up

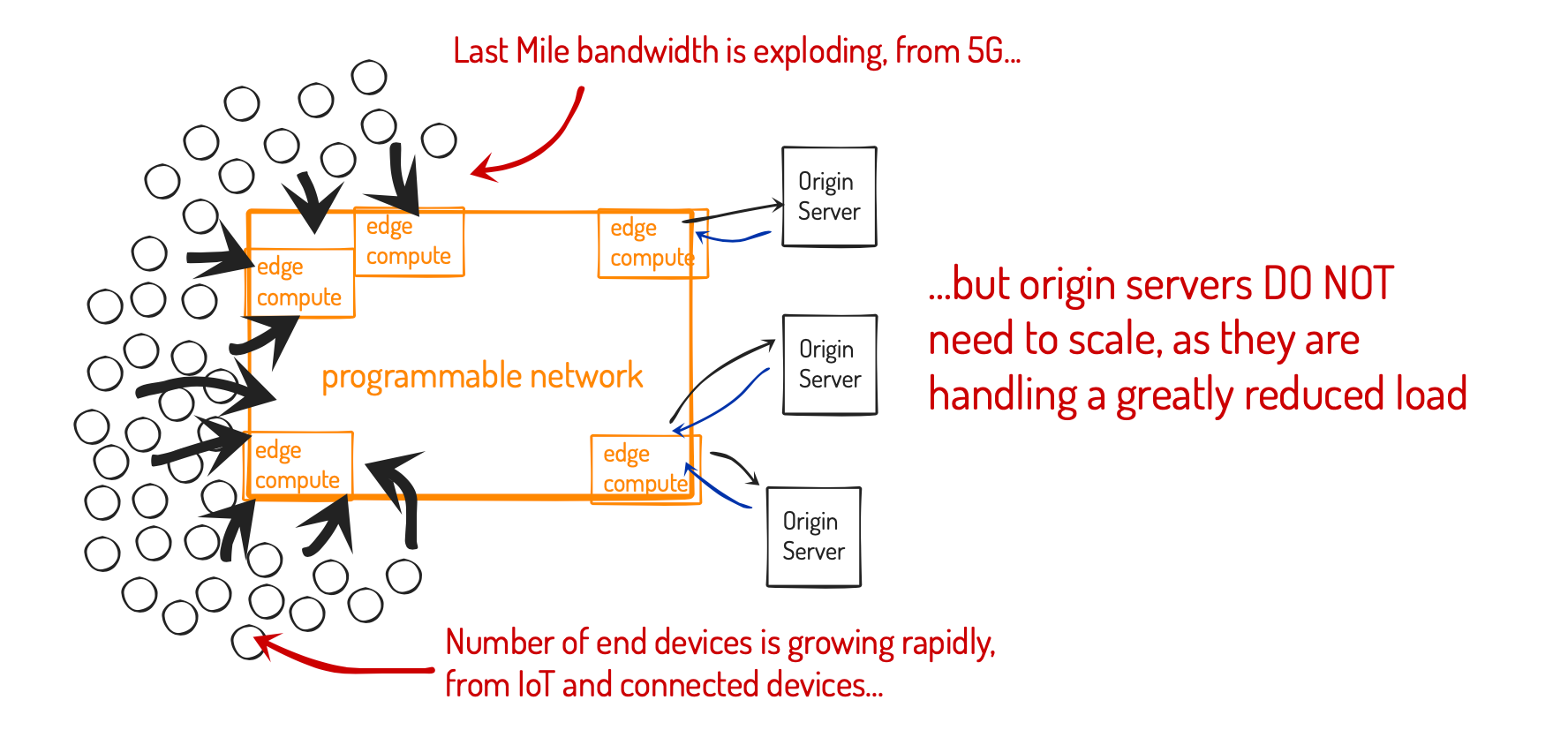

One enormous benefit of using software-defined networking (SDN) in their architecture is in its ability to scale. With edge networks, this allows for a VERY lopsided scaling of traffic, which massively benefits customers by keeping their infrastructure and bandwidth costs contained. 5G is greatly increasing the amount of bandwidth and traffic that end devices will be generating to ISPs. Meanwhile, the number of end devices is exploding, with IoT and connected devices that are controlling or observing out in the field. With edge networks handling the increased scale at the endpoint edge, the origin servers (and the cloud or on-premise infrastructure it all sits upon) do NOT necessarily need to scale.

Edge networks based on SDN have an inherent leg up over those that maintain stoic piles of networking gear in each POP. Edge networks are not built the same, especially those from legacy CDN providers. This past quarter, even Zscaler had to use public cloud infrastructure in AWS and Azure to handle the unexpected 10x (!!) increase in ZPA traffic arising from stay-at-home. While that sounds good on its surface, it also means their pre-built networking architecture couldn’t handle that surprise of a rapid influx of new users. Fastly, on the other hand, is using custom software-based networking services on Arista switches; this allows them a lot more flexibility and capacity to scale.

Did I say developer-friendly?

Combine all that scaled networking with edge compute that can also scale. Fastly and Cloudflare are building edge compute development platforms that are incredibly fast, secure, and lean. They support next-gen serverless paradigms like WebAssembly (WASM), a binary format (think .exe files) that code in a wide variety of supported development languages can be compiled into. This then runs in browser-based runtimes (think of the browser as the operating system running the .exe), or a level deeper on systems using WASI (a system interface for WASM that allows the compiled binaries to run outside of browsers). Cloudflare is using the browser-based system (Chrome V8 Isolates), while Fastly made its own WASM compiler and runtime with WASI support (Lucet). I am not sure which development architecture is better than the other, but I do not believe that speed is ultimately the guideline to watch. Both companies have developer helper applications that can be used to code, debug, test, deploy and monitor their apps within their respective edge compute environments. And both companies are leveraging all these tools themselves internally, as all new product lines are likely to be built on their development platforms.

Final Thoughts

Some final takeaways on the real power behind this new networking and compute paradigm called edge networks:

- Edge networks, like CDNs, can greatly reduce your outbound & inbound traffic to origin servers (cost savings in bandwidth and server/networking infrastructure). But they are so much more than this.

- Edge servers can be running compute and memory intensive tasks on the edge instead of at the origin.

- But going beyond standalone edge compute, these architectures also create a global programmable network between those edge servers (the entry points), and beyond (from edge-to-endpoint and edge-to-origin). The true power of edge networks lies BETWEEN the edges, not just in edge compute by itself.

- Expanding edge compute across a network of edge servers, distributed edge computing can be doing heavier compute actions (like analytics) much closer to endpoints than traditionally done, with very low latency (by being so near the endpoints).

- The software-based networking architecture of edge networks can easily be handling traffic surges in a dispersed & distributed way. Edge servers can be dispersing traffic to other locations as needed, to better handle workloads. All that combines into a scalable platform that is capable of handling surges of traffic from either edge of the network (endpoint or service side). 5G and IoT are set to cause a huge increase in the endpoint side of the equation.

- Existing CDN capabilities are BUT ONE APPLICATION on top of edge networks. They are capable of much, much more. Cloudflare already has cybersecurity product lines over edge network traffic.

So what markets and industries might benefit from edge compute? The easy answer is always "IoT now and autonomous driving later", but I am not one to bet on aspirations, having to count on future success or trends that are not yet reality. I see many industries and product lines that could be leveraging edge networks RIGHT NOW, not 2-3-4-5 years into the future.

- IoT (dispersed sensors, cameras, embedded devices, LIDAR, POS systems, etc) as more and more endpoint devices appear

- 5G (increase of bandwidth on cellular networks, from endpoints to ISP) means the endpoint bandwidth will keep scaling

- High-speed, network-intensive applications, like AR/VR and gaming, that require low latency

- Dispersed fleet & asset tracking, or infrastructure monitoring

- Device or vehicle AI (compute could be offloaded to nearest edge), ultimately leading to autonomous driving

- Voice-recognition systems (compute could be offloaded to nearest edge)

- Distributed analytics & ML/AI workloads (compute could be offloaded to nearest edge, then distributed)

- Data tracking & compliance systems across the global regulatory environment, which is becoming critical with the high number of data sovereignty laws now appearing

- Programmable ad-tech (moving closer to the endpoints they deliver to)

- Programmable video streaming (mid-stream adjustments in video manifest, or routing live feeds around trouble spots)

Both Fastly and Cloudflare are the upstarts taking this developer-friendly approach over their entire edge network architecture. This allows a customer to tie deeply into their services, and makes them stickier. But it also means that they are capable of far more than traditional CDNs. Fastly has been focused on being the fastest CDN, but their capabilities took off when they made the pivot to making it all programmable. Larger competition is sure to follow in their footsteps, but Fastly and Cloudflare have such a head start that I’m not sure those competitors will ever catch up. Legacy providers have rooms full of existing legacy networking hardware to replace before they can even begin to replicate what Fastly and Cloudflare have architected.

So needless to say, I don’t agree with comments that Fastly is “just another CDN”, operating in what is now a lower-growth commodity business. Hopefully this helps you step back and better appreciate what these programmable edge networks are truly capable of. Customers, as well as the edge networks themselves, will be making products with these building blocks, equivalent to what Twilio has done for communications. Sure, other CDNs and the cloud providers will try to catch up, but they have different focuses (mostly, keeping existing customers happy). The upstarts will win this battle, as both Fastly and Cloudflare are proving with their hypergrowth right now. But going forward, with the many benefits that a programmable, global edge network can provide... there is a lot more to come.

Unlike Cloudflare, Fastly fortunately relies more on usage-based pricing for their CDN features, so they are having a huge upswing in top line revenue growth right now (bouncing from 38% to ~55%+ in this upcoming quarter). They are excelling right now as the more developer-friendly and nimble CDN. The massive increase in internet usage over the past few months, and likely the next several, is a huge wind in their sails right now. But looking forward, their edge compute platform is just beginning, and I don’t think we’ll see the true benefits until they get out of beta in 2021. So with Fastly, we have an acceleration in top line growth occurring right now, that is feeding directly into potentially even more growth, coming soon from the next generation of capabilities of their edge servers.

Cloudflare and Zscaler, however, have decided to focus heavily on security. This is a massive new market that is especially relevant right now with work-from-home. Zscaler is using their Secure Web Gateway and Zero Trust protection capabilities across their edge network & cloud platform to protect the traffic of enterprise users. But unfortunately, they are hampered by poor marketing, a top-down sales process that involves a major overhaul of the corporate network, and a difficult-to-implement architecture requiring system integrators. Their growth has dropped dramatically, but the market still sees all their potential as companies continue to need help securing their traffic. And once you go Zscaler and Zero Trust, you don’t go back to the old network appliance ways. But I am not that interested in Zscaler, a specialty edge network, over the other more generalized ones. I feel that Cloudflare is a bit under the radar right now with all the hype around Fastly. They are a steady 48-50% grower just on their current CDN and edge compute product lines, and just added a network cybersecurity protection platform on top of it all, potentially doubling their TAM. Since Cloudflare for Teams was made free in the face of the pandemic (with its massive shift to work-from-home), we’ll have to wait a bit longer for the benefits. There is likely an acceleration in top line growth starting in September, so the Oct-Dec Q4 quarter should really see a boost.

It’s an exciting time to be an edge network. Fastly now with the huge increase in usage, Cloudflare with its continued solid performance and upcoming cybersecurity product lines – and with both of these, all the potential both have NOW and in the FUTURE with their global edge networks.

Add’l Reading

- Fastly technical blog post on how it architected its network, using software-defined networking to allow for scale.

- Cloudflare CEO Prince's blog post on what Cloudflare is factoring as the most important features in its edge network. I concur with him that speed is but one requirement of many.

- Software Stack Investing’s recent technical deep dive into the architectural decisions made in the design of Fastly’s edge compute, plus his recap of Serverless Week changes just announced at Cloudflare.

- My earlier deep dive into Cloudflare in February. I sure wish I had studied Fastly more at the time to better understand their potential (and had invested in them then, months before I caught on). I have had plenty of success with NET since February, and now own both NET and FSLY.